Difference between revisions of "CTK Documentation"

From Zhang Laboratory

(→Collapsing PCR duplicates) |

|||

| Line 1: | Line 1: | ||

=Introduction= | =Introduction= | ||

| − | + | [[File:CTK_Pipeline_Overview.png|500px|center]] | |

| − | Crosslinking induced mutation site | + | <center>'''[[CTK_usage|CTK usage]] | [[CTK_FAQ|FAQ]]'''</center> |

| + | |||

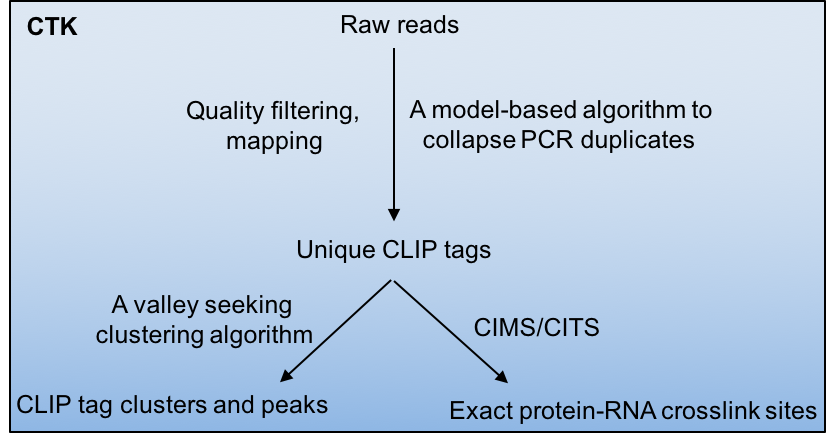

| + | Crosslinking and immunoprecipitation paired with highthroughput sequencing (HITS-CLIP or CLIP-Seq) has now been widely used to map protein-RNA interactions on a genome-wide scale. The CLIP Tool Kit (CTK) is a software package that provides a set of tools for analysis of CLIP data starting from the raw reads generated by the sequencer. It includes pipelines to filter and map reads, collapse PCR duplicates to obtain unique CLIP tags, define CLIP tag clusters and call peaks, and define the exact protein-RNA crosslink sites by CIMS and CITS analysis. This software package is an expansion of our previous CIMS package. | ||

| + | |||

| + | Crosslinking induced mutation site (CIMS) analysis is a computational method for CLIP-Seq data analysis to determine exact protein-RNA crosslink sites and thereby map protein-RNA interactions at single-nucleotide resolution. This method is based on the observation that UV cross linked amino-acid-RNA adducts introduce reverse transcription errors in cDNAs at certain frequencies, which are captured by sequencing and subsequent comparison of CLIP tags with a reference genome. More details of the biochemical and computational aspects of CLIP-Seq can be found in the following references: | ||

<pre> | <pre> | ||

| Line 11: | Line 15: | ||

</pre> | </pre> | ||

| − | + | For crosslinking induced trunction analysis (CITS) described below, please refer to: | |

| − | For | + | |

<pre> | <pre> | ||

Weyn-Vanhentenryck,S.,M.*, Mele,A.*, Yan,Q.*, Sun,S., Farny,N., Zhang,Z., Xue,C., Herre,M., Silver,P.A., Zhang,M.Q., Krainer,A.R., Darnell,R.B. †, Zhang,C. † 2014. HITS-CLIP and integrative modeling define the Rbfox splicing-regulatory network linked to brain development and autism. Cell Rep. 10.1016/j.celrep.2014.02.005. | Weyn-Vanhentenryck,S.,M.*, Mele,A.*, Yan,Q.*, Sun,S., Farny,N., Zhang,Z., Xue,C., Herre,M., Silver,P.A., Zhang,M.Q., Krainer,A.R., Darnell,R.B. †, Zhang,C. † 2014. HITS-CLIP and integrative modeling define the Rbfox splicing-regulatory network linked to brain development and autism. Cell Rep. 10.1016/j.celrep.2014.02.005. | ||

</pre> | </pre> | ||

| − | |||

| − | |||

=Versions= | =Versions= | ||

| − | *v1.0.0 ( 10-12-2015 ) | + | *v1.0.3 ( 08-08-2016 ), current |

| + | **improvement in software packaging and usage | ||

| + | *v1.0.0 ( 10-12-2015 ) | ||

**The initial beta release | **The initial beta release | ||

=Download= | =Download= | ||

| − | + | ==Source code== | |

| − | *czplib (perl): a perl library with various functions for genomic/bioinformatic analysis. | + | *czplib (perl): a perl library with various functions for genomic/bioinformatic analysis. [https://github.com/chaolinzhanglab/czplib download from github] |

| − | *CTK (perl): the core algorithm. | + | *CTK (perl): the core algorithm. [https://github.com/chaolinzhanglab/ctk download from github] |

| − | |||

| − | == | + | ==Sample dataset== |

| − | + | '''Raw FASTQ files:''' | |

| − | + | In this documentation, we will use the sample mouse brain Rbfox1-3 CLIP data (Weyn-Vanhentenryck et al. Cell Reports 2014) as a guide. Two protocols (standard CLIP and BrdU-CLIP) were used to generate this dataset. | |

| − | + | ||

| − | + | ||

| − | = | + | The raw sequence files from Illumina sequencing can be downloaded from SRA (http://www.ncbi.nlm.nih.gov/sra/?term=SRP035321), and we assume FASTQ files have been generated using the sra toolkit (http://www.ncbi.nlm.nih.gov/Traces/sra/sra.cgi?cmd=show&f=software&m=software&s=software) and the following files Fox1_1.fastq.gz, Fox1_2.fastq.gz, Fox1_3.fastq.gz...Fox3_5.fastq.gz can be moved to the directory called ''fastq'' under the ''test'' folder in home directory (i.e., ~/test is our working directory). Fox1_1.fastq.gz, Fox2_1.fastq.gz, and Fox3_1.fastq.gz were generated from the Standard CLIP protocol, and the rest were generated from the BrdU CLIP protocol. |

| − | |||

| − | + | '''Download sample output files of major steps here:''' | |

| + | *[http://zhanglab.c2b2.columbia.edu/data/CTK/preprocess.tar.gz Fully preprocessed FASTQ files] : output files generated for use right before mapping. The samples have been quality filtered, trimmed of 3' linker sequences, had exact duplicates collapsed, and barcodes stripped. | ||

| + | |||

| + | *[http://zhanglab.c2b2.columbia.edu/data/CTK/unique.tar.gz Unique CLIP tag and mutation files] : output files generated after mapping and collapsing PCR duplicates. | ||

| + | |||

| + | *[http://zhanglab.c2b2.columbia.edu/data/CTK/CIMS.tar.gz CIMS output files] : output files after CIMS analysis. | ||

| + | |||

| + | *[http://zhanglab.c2b2.columbia.edu/data/CTK/CITS.tar.gz CITS output files] : output files after CITS analysis. | ||

| + | |||

| + | =Prerequisites= | ||

| + | |||

| + | This software is implemented in perl. It also relies on several standard linux/unix tools such as grep, cat, sort, etc. We have tested the software on RedHat Linux (Linux 2.6.32-504.8.1.el6.x86_64), although it is expected to work on most unix-like systems, including Mac OS X. In addition, several software packages are required by the pipeline for sequence preprocessing and alignment (the version number in our test is also indicated). | ||

| + | |||

| + | *FASTX Tool-Kit Version 0.0.13: http://hannonlab.cshl.edu/fastx_toolkit/download.html | ||

| + | *Burrows Wheeler Aligner (BWA) Version 0.7.12: http://bio-bwa.sourceforge.net/ | ||

| + | *Samtools Version 1.3.1: http://samtools.sourceforge.net | ||

| + | *Perl Version 5.14.3 was used for testing, but we expect that newer versions of Perl will also be compatible: https://www.perl.org/get.html | ||

| + | *Perl library Math::CDF Version 0.1: http://search.cpan.org/~callahan/Math-CDF-0.1/CDF.pm | ||

| + | |||

| + | =Installation= | ||

| + | |||

| + | * Download and install software packages described in prerequisites. | ||

| + | * Download the czplib perl library files (refer back to '''Download''' section above) | ||

| + | * Decompress and move to whatever directory you like (as an example, we use /usr/local/lib/) | ||

| + | * Replace "x.tgz" below with the version of the package you downloaded | ||

<pre> | <pre> | ||

$tar zxvf czplib.v1.0.x.tgz | $tar zxvf czplib.v1.0.x.tgz | ||

| − | $mv czplib /usr/local/lib | + | $mv czplib /usr/local/lib/ |

</pre> | </pre> | ||

| Line 57: | Line 80: | ||

</pre> | </pre> | ||

| − | * Download CTK | + | * Download CTK code and likewise decompress and move to whatever directory you like (as an example, we use /usr/local/) |

| − | + | ||

<pre> | <pre> | ||

$tar zxvf CTK.v1.0.x.tgz | $tar zxvf CTK.v1.0.x.tgz | ||

$cd CTK | $cd CTK | ||

| − | $ | + | $cd .. |

| − | $mv CTK /usr/local/ | + | $mv CTK /usr/local/ |

</pre> | </pre> | ||

| − | Add the dir to your $PATH environment variable. | + | Add the dir to your $PATH environment variable if you would like. |

| + | |||

| + | Finally, some of the scripts will use a cache directory, which is under the working directory by default. One can specify another folder for cache using environment variable. | ||

| + | |||

| + | <pre> | ||

| + | |||

| + | #e.g., add the following lines in .bash_profile | ||

| + | CACHEHOME=$HOME/cache | ||

| + | export CACHEHOME | ||

| + | </pre> | ||

=Read preprocessing= | =Read preprocessing= | ||

| − | Compared to previous | + | Compared to our previous software package, this new pipeline, in all preprocessing steps prior to read alignment, operates on FASTQ files to take advantage of sequence quality scores. |

| − | In our current experimental design, several CLIP libraries with different indexes are typically pooled together to be sequenced in one lane | + | ==Sample dataset== |

| + | In our current experimental design (BrdU-CLIP), several CLIP libraries with different indexes are typically pooled together to be sequenced in one lane. | ||

| − | == | + | The sample dataset we use as a guide (mouse brain Rbfox1-3 CLIP) was generated using the standard CLIP or BrdU-CLIP protocols (see the table below; see the Download section if you have not downloaded them). We assume FASTQ files have been generated using the sra toolkit (http://www.ncbi.nlm.nih.gov/Traces/sra/sra.cgi?cmd=show&f=software&m=software&s=software) and the following files Fox1_1.fastq.gz, Fox1_2.fastq.gz, Fox1_3.fastq.gz...Fox3_5.fastq.gz can be moved to the directory called ''fastq'' under the ''test'' folder in home directory (i.e., ~/test is our working directory). Fox1_1.fastq.gz, Fox2_1.fastq.gz, and Fox3_1.fastq.gz were generated from the Standard CLIP protocol, and the rest were generated from the BrdU CLIP protocol. |

| + | |||

| + | Reads from the standard CLIP protocol begin with a 5nt random barcode, followed by the actual cDNA tags of variable lengths, and possibly 3' adaptor sequences. | ||

| + | |||

| + | Reads from the BrdU-CLIP protocol begin with a 14 nucleotides that are sample index and random barcode (4 nt sample index+ a fixed base (either G or C)+ an 8-nt random barcode + a G), followed by the actual cDNA tags of variable lengths, and possibly 3' adaptor sequences. | ||

| + | |||

| + | |||

| + | The number of reads in each file, after each step of processing, is summarized in Table 1 below. | ||

| + | {| class="wikitable" | ||

| + | |+ Table 1: Summary of read numbers analyzed by CTK. | ||

| + | ! Protein | ||

| + | ! Sample | ||

| + | ! CLIP protocol | ||

| + | ! # of raw reads | ||

| + | ! # of filtered reads | ||

| + | ! # of trimmed reads | ||

| + | ! # of collapsed reads | ||

| + | ! # of mapped reads | ||

| + | ! # of unique tags | ||

| + | |- | ||

| + | | Fox1 | ||

| + | | Fox1_1 | ||

| + | | Standard | ||

| + | | 128,502,865 | ||

| + | | 124,979,444 | ||

| + | | 113,958,244 | ||

| + | | 4,953,805 | ||

| + | | 3,713,799 | ||

| + | | 357,094 | ||

| + | |- | ||

| + | | Fox1 | ||

| + | | Fox1_2 | ||

| + | | BrdU | ||

| + | | 42,373,740 | ||

| + | | 39,121,986 | ||

| + | | 35,683,047 | ||

| + | | 1,465,789 | ||

| + | | 1,098,974 | ||

| + | | 94,757 | ||

| + | |- | ||

| + | | Fox1 | ||

| + | | Fox1_3 | ||

| + | | BrdU | ||

| + | | 36,103,020 | ||

| + | | 32,500,181 | ||

| + | | 29,682,907 | ||

| + | | 2,112,635 | ||

| + | | 1,468,725 | ||

| + | | 161,487 | ||

| + | |- | ||

| + | | Fox1 | ||

| + | | Fox1_4 | ||

| + | | BrdU | ||

| + | | 64,528,510 | ||

| + | | 52,712,783 | ||

| + | | 48,292,964 | ||

| + | | 7,025,945 | ||

| + | | 5,262,144 | ||

| + | | 1,194,617 | ||

| + | |- | ||

| + | | Fox2 | ||

| + | | Fox2_1 | ||

| + | | Standard | ||

| + | | 135,171,343 | ||

| + | | 131,229,217 | ||

| + | | 121,238,246 | ||

| + | | 5,406,496 | ||

| + | | 3,716,401 | ||

| + | | 295,180 | ||

| + | |- | ||

| + | | Fox2 | ||

| + | | Fox2_2 | ||

| + | | BrdU | ||

| + | | 42,744,272 | ||

| + | | 39,351,367 | ||

| + | | 35,005,375 | ||

| + | | 1,314,878 | ||

| + | | 968,115 | ||

| + | | 83,358 | ||

| + | |- | ||

| + | | Fox2 | ||

| + | | Fox2_3 | ||

| + | | BrdU | ||

| + | | 31,512,102 | ||

| + | | 28,530,199 | ||

| + | | 25,877,412 | ||

| + | | 2,356,210 | ||

| + | | 1,581,335 | ||

| + | | 337,811 | ||

| + | |- | ||

| + | | Fox2 | ||

| + | | Fox2_4 | ||

| + | | BrdU | ||

| + | | 46,383,375 | ||

| + | | 40,317,796 | ||

| + | | 29,418,988 | ||

| + | | 2,761,500 | ||

| + | | 1,803,408 | ||

| + | | 165,645 | ||

| + | |- | ||

| + | | Fox3 | ||

| + | | Fox3_1 | ||

| + | | Standard | ||

| + | | 181,578,804 | ||

| + | | 173,884,893 | ||

| + | | 162,979,630 | ||

| + | | 10,405,177 | ||

| + | | 7,742,410 | ||

| + | | 877,256 | ||

| + | |- | ||

| + | | Fox3 | ||

| + | | Fox3_2 | ||

| + | | BrdU | ||

| + | | 38,828,880 | ||

| + | | 35,983,470 | ||

| + | | 27,171,512 | ||

| + | | 1,185,180 | ||

| + | | 857,925 | ||

| + | | 143,522 | ||

| + | |- | ||

| + | | Fox3 | ||

| + | | Fox3_3 | ||

| + | | BrdU | ||

| + | | 31,774,690 | ||

| + | | 29,095,065 | ||

| + | | 26,332,663 | ||

| + | | 2,295,078 | ||

| + | | 1,669,958 | ||

| + | | 399,878 | ||

| + | |- | ||

| + | | Fox3 | ||

| + | | Fox3_4 | ||

| + | | BrdU | ||

| + | | 32,658,459 | ||

| + | | 29,720,851 | ||

| + | | 21,737,823 | ||

| + | | 2,017,979 | ||

| + | | 1,479,115 | ||

| + | | 413,847 | ||

| + | |- | ||

| + | | Fox3 | ||

| + | | Fox3_5 | ||

| + | | BrdU | ||

| + | | 58,175,879 | ||

| + | | 47,324,888 | ||

| + | | 42,941,116 | ||

| + | | 6,200,102 | ||

| + | | 4,551,275 | ||

| + | | 1,076,782 | ||

| + | |- | ||

| + | | '''Total''' | ||

| + | | | ||

| + | | | ||

| + | | '''870,706,469''' | ||

| + | | | ||

| + | | | ||

| + | | | ||

| + | | | ||

| + | | '''5,601,234''' | ||

| + | |} | ||

| + | |||

| + | ==Demultiplexing samples == | ||

| + | |||

| + | The files downloaded from SRA have already be demultiplexed. Therefore the next step has already been performed. It is included for illustration purposes for the user who would like to start from a FASTQ file consisting of multiple libraries. | ||

| − | |||

<pre> | <pre> | ||

| − | + | cd ~/test/fastq | |

| − | cd | + | |

</pre> | </pre> | ||

| + | |||

| + | Assuming that we have six samples with indexes GTCA, GCATG, ACTG, AGCT, GCATC, TCGA, the following script will extract reads for each library using a simple loop. | ||

<pre> | <pre> | ||

| − | #! | + | #!/bin/bash |

| + | #For illustration | ||

| + | |||

index=(GTCA GCATG ACTG AGCT GCATC TCGA); | index=(GTCA GCATG ACTG AGCT GCATC TCGA); | ||

samples=(sample1 sample2 sample3 sample4 sample5 sample6); | samples=(sample1 sample2 sample3 sample4 sample5 sample6); | ||

| Line 91: | Line 289: | ||

idx=${index[$i]} | idx=${index[$i]} | ||

name=${samples[$i]} | name=${samples[$i]} | ||

| − | perl | + | perl /usr/local/CTK/fastq_filter.pl -v -if sanger -index 0:$idx -of fastq raw.fastq.gz - | gzip -c > $name.fastq.gz |

done | done | ||

</pre> | </pre> | ||

| Line 99: | Line 297: | ||

<pre> | <pre> | ||

| − | #! | + | #!/bin/bash |

#$ -t 1-6 -m a -cwd -N CLIP | #$ -t 1-6 -m a -cwd -N CLIP | ||

| − | # | + | #For illustration |

index=(GTCA GCATG ACTG AGCT GCATC TCGA); | index=(GTCA GCATG ACTG AGCT GCATC TCGA); | ||

| Line 109: | Line 307: | ||

idx=${index[$SGE_TASK_ID-1]} | idx=${index[$SGE_TASK_ID-1]} | ||

name=${samples[$SGE_TASK_ID-1]} | name=${samples[$SGE_TASK_ID-1]} | ||

| − | perl | + | perl /usr/local/CTK/fastq_filter.pl -v -if sanger -index 0:$idx -of fastq raw.fastq.gz - | gzip -c > $name.fastq.gz |

</pre> | </pre> | ||

| − | + | The script /usr/local/CTK/fastq_filter.pl will extract reads with indicated index sequence (e.g., GTCA) starting from position 0 (specified by the -index option) in the read. | |

| + | |||

| + | *Usage and additional explanation of [[CTK_usage#fastq_filter.pl|fastq_filter.pl]] | ||

| + | |||

| + | '''Note 1''': This script can also be used to demultiplex samples from other CLIP protocols as long as the position relative to the read start is fixed. | ||

| + | |||

| + | '''Note 2''': To get usage information, just run the script without any parameters (the same for the other scripts in the package). | ||

| + | |||

| + | ==Read quality filtering== | ||

<pre> | <pre> | ||

| + | cd ~/test | ||

| + | mkdir filtering | ||

| + | cd filtering | ||

| + | </pre> | ||

| + | |||

| + | The same fastq_filter.pl script will extract reads passing quality filters for each library (of standard CLIP-seq data) using a simple loop. | ||

| + | |||

| + | <pre> | ||

| + | #!/bin/bash | ||

| + | |||

| + | for f in Fox1_1 Fox2_1 Fox3_1 | ||

| + | do | ||

| + | perl /usr/local/CTK/fastq_filter.pl -v -if sanger -f mean:0-29:20 -of fastq ../fastq/$f.fastq.gz $f.fastq | ||

| + | done | ||

| + | </pre> | ||

| + | |||

| + | |||

| + | This is the same thing, but using a Sun Grid Engine to execute this UNIX job on a remote machine. | ||

| + | <pre> | ||

| + | #!/bin/bash | ||

| + | #$ -t 1-3 -m a -cwd -N CLIP | ||

| + | |||

| + | files=(Fox1_1 Fox2_1 Fox3_1); | ||

| + | f=${files[$SGE_TASK_ID-1]} | ||

| − | perl | + | perl /usr/local/CTK/fastq_filter.pl -v -if sanger -f mean:0-29:20 -of fastq ../fastq/$f.fastq.gz $f.fastq |

</pre> | </pre> | ||

| − | + | This script filters raw reads based on quality scores. -f mean:0-29-20 specifies a mean score of 20 or above in the first 30 bases (i.e., positions 0-29 for Standard CLIP), which includes 5 positions with sample indexes and the random barcode, followed by 25 positions with the actual CLIP tag. | |

| − | + | The filtering should be run again on the BrdU CLIP (or iCLIP) samples slightly differently. | |

<pre> | <pre> | ||

| + | #!/bin/bash | ||

| + | #$ -t 1-10 -m a -cwd -N CLIP | ||

| − | + | files=(Fox1_2 Fox1_3 Fox1_4 Fox2_2 Fox2_3 Fox2_4 Fox3_2 Fox3_3 Fox3_4 Fox3_5); | |

| + | f=${files[$SGE_TASK_ID-1]} | ||

| + | perl /usr/local/CTK/fastq_filter.pl -v -if sanger -f mean:0-38:20 -of fastq ../fastq/$f.fastq.gz $f.fastq | ||

</pre> | </pre> | ||

| − | + | -f mean:0-38-20 specifies a mean score of 20 or above in the first 39 bases (i.e., positions 0-38 for BrdU CLIP), which includes 14 positions with sample indexes and the random barcode, followed by 25 positions with the actual CLIP tag. | |

| − | '''Note | + | The reason we filter as such is because low quality reads can introduce mapping errors and background. They will inflate the number of unique tags after removal of PCR duplicates. |

| + | |||

| + | '''Note 1:''' The reason we used the first four positions of the index for demultiplexing is due to historical confusion about the identity of the index sequences. It really should be 5nt, but it does not make a substantial difference. | ||

| + | |||

| + | '''Note 2:''' As a good practice, always check the number of reads in the raw files and compare the number of reads left after filtering to confirm if the numbers are what you would expect. This can be done by running the command: | ||

| + | |||

| + | <pre> | ||

| + | #for raw reads | ||

| + | zcat ../fastq/*.fastq.gz | wc -l | ||

| + | |||

| + | #for filtered reads | ||

| + | wc -l *.fastq | ||

| + | </pre> | ||

| + | |||

| + | The '''wc''' command will give the number of lines (divide by 4 to get the actual read number). | ||

| + | |||

| + | '''Note 3:''' Demultiplexing and quality filtering can be combined into a single step by specifying -index and -f at the same time. | ||

| + | |||

| + | <pre> | ||

| + | perl /usr/local/CTK/fastq_filter.pl -v -if sanger -index 0:GTCA -f mean:0-38:20 -of fastq ../fastq/in.fastq.gz - | gzip -c > out.fastq.gz | ||

| + | </pre> | ||

==Trimming of 3' linker sequences== | ==Trimming of 3' linker sequences== | ||

| − | + | For long reads that are common now, collapsing before trimming is not very helpful. Therefore, we trim the 3' linker first (which can vary depending on the specific CLIP protocol performed). | |

| − | For each sample, run the following command using tools provided by the | + | For each sample, run the following command using tools provided by the Fastx Toolkit. |

<pre> | <pre> | ||

| + | #!/bin/bash | ||

| + | #$ -t 1-3 -m a -cwd -N CLIP | ||

| + | #-l "mem=2G,time=192::" | ||

| − | + | files=(Fox1_1 Fox2_1 Fox3_1); | |

| + | f=${files[$SGE_TASK_ID-1]} | ||

| + | fastx_clipper -a GTGTCAGTCACTTCCAGCGG -l 20 -n -i $f.fastq | fastq_quality_trimmer -t 5 -l 20 -o $f.trim.fastq #note that the 3' linker will vary for other CLIP protocol variations. | ||

</pre> | </pre> | ||

This command will remove the 3' linker and/or also remove extremely low quality bases (score < 5). | This command will remove the 3' linker and/or also remove extremely low quality bases (score < 5). | ||

| − | + | You can do the same for the BrdU CLIP files: | |

<pre> | <pre> | ||

| + | #!/bin/bash | ||

| + | #$ -t 1-10 -m a -cwd -N CLIP | ||

| + | #-l "mem=2G,time=192::" | ||

| + | files=(Fox1_2 Fox1_3 Fox1_4 Fox2_2 Fox2_3 Fox2_4 Fox3_2 Fox3_3 Fox3_4 Fox3_5); | ||

| + | f=${files[$SGE_TASK_ID-1]} | ||

| − | wc -l | + | fastx_clipper -a TCGTATGCCGTCTTCTGCTTG -l 29 -n -i $f.fastq | fastq_quality_trimmer -t 5 -l 29 -o $f.trim.fastq |

| + | |||

| + | </pre> | ||

| + | |||

| + | For fastx_clipper usage information: | ||

| + | <pre> | ||

| + | fastx_clipper -h | ||

| + | </pre> | ||

| + | |||

| + | '''Note 1:''' It is good to check the number of reads by running the command: | ||

| + | |||

| + | <pre> | ||

| + | |||

| + | wc -l *.trim.fastq | ||

</pre> | </pre> | ||

| Line 163: | Line 440: | ||

<pre> | <pre> | ||

| − | perl | + | #!/bin/bash |

| + | #$ -t 1-13 -m a -cwd -N CLIP | ||

| + | files=(Fox1_1 Fox1_2 Fox1_3 Fox1_4 Fox2_1 Fox2_2 Fox2_3 Fox2_4 Fox3_1 Fox3_2 Fox3_3 Fox3_4 Fox3_5); | ||

| + | f=${files[$SGE_TASK_ID-1]} | ||

| + | |||

| + | perl /usr/local/CTK/fastq2collapse.pl $f.trim.fastq $f.trim.c.fastq | ||

</pre> | </pre> | ||

| − | + | *Usage and additional explanation of [[CTK_usage#fastq2collapse.pl|fastq2collapse.pl]]. | |

| − | + | '''Note 1:''' It is good to check the number of reads by running the command: | |

<pre> | <pre> | ||

| + | wc -l *.trim.c.fastq | ||

| + | </pre> | ||

| − | + | ==Strip barcode== | |

| + | The following script removes the 5' degenerate barcode. | ||

| + | |||

| + | <pre> | ||

| + | |||

| + | #The barcode for standard CLIP is 5 nucleotides long | ||

| + | for f in Fox1_1 Fox2_1 Fox3_1 | ||

| + | do | ||

| + | perl /usr/local/CTK/stripBarcode.pl -format fastq -len 5 $f.trim.c.fastq $f.trim.c.tag.fastq | ||

| + | done | ||

| + | |||

| + | #the barcode for BrdU CLIP is 14 nucleotides long | ||

| + | for f in Fox1_2 Fox1_3 Fox1_4 Fox2_2 Fox2_3 Fox2_4 Fox3_2 Fox3_3 Fox3_4 Fox3_5 | ||

| + | do | ||

| + | perl /usr/local/CTK/stripBarcode.pl -format fastq -len 14 $f.trim.c.fastq $f.trim.c.tag.fastq | ||

| + | done | ||

</pre> | </pre> | ||

| + | |||

| + | |||

| + | '''Note 1:''' We include sample index as part of the random barcode here. | ||

| + | |||

| + | *Usage and additional explanation of [[CTK_usage#stripBarcode.pl|stripBarcode.pl]]. | ||

| + | |||

After this step, one can get the distribution of tag length for diagnostic purposes. | After this step, one can get the distribution of tag length for diagnostic purposes. | ||

| Line 181: | Line 486: | ||

<pre> | <pre> | ||

| − | awk '{if(NR%4==2) {print $0}}' | + | awk '{if(NR%4==2) {print $0}}' $f.trim.c.tag.fastq | awk '{print length($0)}' | sort -n | uniq -c | awk '{print $2"\t"$1}' > $f.trim.c.tag.seqlen.stat.txt |

| + | </pre> | ||

| + | |||

| + | [http://zhanglab.c2b2.columbia.edu/data/CTK/preprocess.tar.gz You can download fully preprocessed FASTQ files here ] or alternatively use command: | ||

| + | |||

| + | <pre> | ||

| + | wget http://zhanglab.c2b2.columbia.edu/data/CTK/preprocess.tar.gz | ||

| + | tar -zxvf preprocess.tar.gz | ||

</pre> | </pre> | ||

=Read mapping & parsing= | =Read mapping & parsing= | ||

| − | + | We are now using BWA (version 0.7.12) for alignment instead of novoalign for two reasons: | |

| + | * novoalign is slower than some of the other algorithms that becomes available, in part because the academic version of novoalign does not allow multi threading. | ||

| + | * BWA allows one to specify mismatch rate instead of the the absolute number, which is more appropriate for tags of different sizes (i.e. a smaller number of mismatches allowed for shorter tags after trimming). | ||

| − | + | ==Indexing reference genome == | |

| − | + | ||

| − | + | This step needs to be done only once. | |

| + | |||

| + | After you have installed BWA, prepare a reference genome: | ||

| + | |||

| + | For example, build a reference mm10 genome. Download the reference genome here: http://ccb.jhu.edu/software/tophat/igenomes.shtml. In this case, make sure you are downloading the "''Mus musculus'' UCSC MM10" reference. | ||

| + | <pre> | ||

| + | wget ftp://igenome:G3nom3s4u@ussd-ftp.illumina.com/Mus_musculus/UCSC/mm10/Mus_musculus_UCSC_mm10.tar.gz | ||

| + | tar -xvf Mus_musculus_UCSC_mm10.tar.gz | ||

| + | cd /Mus_musculus_UCSC_mm10/Mus_musculus/UCSC/mm10/Sequence/Chromosomes | ||

| + | </pre> | ||

| + | |||

| + | Change the chromosome header and combine the chromosomes into a full genome. Note that we exclude random chromosomes and the mitochondria chromosome in our analysis. | ||

| + | <pre> | ||

| + | sed -i -- "s/chr//g" *.fa | ||

| + | cat ch1.fa chr2.fa chr3.fa ... chrX.fa chrY.fa > mm10.fa | ||

| + | #The chromosomes included in the index should not include random chromosomes or chrM. | ||

| + | </pre> | ||

| + | |||

| + | Finally, create a BWA index and move it to a directory you like. In this example, the index is in the /genomes/mm10/bwa/ directory. | ||

| + | <pre> | ||

| + | cd /genomes/mm10/bwa/ | ||

| + | bwa index -a bwtsw mm10.fa | ||

| + | </pre> | ||

| + | |||

| + | ==Read mapping== | ||

| + | Now that the reference index has been prepared, you can proceed with mapping/alignment. | ||

<pre> | <pre> | ||

| + | cd ~/test | ||

mkdir mapping | mkdir mapping | ||

cd mapping | cd mapping | ||

| − | |||

| − | bwa | + | </pre> |

| + | |||

| + | Run bwa to align the reads to the reference genome. | ||

| + | <pre> | ||

| + | #!/bin/bash | ||

| + | #$ -t 1-13 -m a -cwd -N CLIP | ||

| + | files=(Fox1_1 Fox1_2 Fox1_3 Fox1_4 Fox2_1 Fox2_2 Fox2_3 Fox2_4 Fox3_1 Fox3_2 Fox3_3 Fox3_4 Fox3_5); | ||

| + | f=${files[$SGE_TASK_ID-1]} | ||

| + | |||

| + | bwa aln -t 4 -n 0.06 -q 20 /genomes/mm10/bwa/mm10.fa ../filtering/$f.trim.c.tag.fastq > $f.sai | ||

| + | bwa samse /genomes/mm10/bwa/mm10.fa $f.sai ../filtering/$f.trim.c.tag.fastq > $f.sam | ||

| + | |||

| + | #the two steps can also be combined, using the following command: | ||

| + | #bwa aln -t 4 -n 0.06 -q 20 /genomes/mm10/bwa/mm10.fa ../filtering/$f.trim.c.tag.fastq | bwa samse /genomes/mm10/bwa/mm10.fa - ../filtering/$f.trim.c.tag.fastq > $f.sam | ||

</pre> | </pre> | ||

| − | The option -n 0.06 specifies slightly more stringent criteria than the default. The number of allowed mismatches (substitutions or indels) is as follows: | + | The option -n 0.06 specifies slightly more stringent criteria than the default. The number of allowed mismatches (substitutions or indels) depending on read length is as follows: |

<pre> | <pre> | ||

| Line 224: | Line 576: | ||

<pre> | <pre> | ||

| − | perl | + | #!/bin/bash |

| + | #$ -t 1-13 -m a -cwd -N CLIP | ||

| + | files=(Fox1_1 Fox1_2 Fox1_3 Fox1_4 Fox2_1 Fox2_2 Fox2_3 Fox2_4 Fox3_1 Fox3_2 Fox3_3 Fox3_4 Fox3_5); | ||

| + | f=${files[$SGE_TASK_ID-1]} | ||

| + | |||

| + | perl /usr/local/CTK/parseAlignment.pl -v --map-qual 1 --min-len 18 --mutation-file $f.mutation.txt $f.sam $f.tag.bed | ||

</pre> | </pre> | ||

| + | |||

This will keep only unique mappings (with MAPQ >=1) and a minimal mapping size of 18 nt. | This will keep only unique mappings (with MAPQ >=1) and a minimal mapping size of 18 nt. | ||

| − | '''Note 1:''' | + | '''Note 1:''' In the tag bed file, the 5′ column records the number of mismatches (substitutions) in each read |

| + | |||

| + | '''Note 2:''' Other aligners might not use a positive MAPQ as an indication of unique mapping. | ||

| − | Another useful option is --indel-to-end, which specifies the number of nucleotides towards the end from which indels should not be called. | + | Another useful option is --indel-to-end, which specifies the number of nucleotides towards the end from which indels should not be called (default=5 nt). |

| − | '''Note | + | '''Note 3:''' The parsing script relies on MD tags, which is an optional field without strict definition in SAM file format specification. Some aligners might have slightly different format how they report mismatches. If other aligners than bwa is used, one should run the following command: |

<pre> | <pre> | ||

| − | samtools view -bS | + | samtools view -bS $f.sam | samtools sort - $f.sorted |

| − | samtools fillmd | + | samtools fillmd $f.sorted.bam /genomes/mm10/bwa/mm10.fa > $f.sorted.md.sam |

</pre> | </pre> | ||

| Line 245: | Line 605: | ||

This will ensure the sam file gets parsed properly. | This will ensure the sam file gets parsed properly. | ||

| − | '''Note | + | *Usage and additional explanation of [[CTK_usage#parseAlignment.pl|parseAlignment.pl]]. |

| + | |||

| + | |||

| + | '''Note 4:''' Keep track what proportion of reads can be mapped uniquely. | ||

<pre> | <pre> | ||

| − | wc -l | + | wc -l *.tag.bed |

</pre> | </pre> | ||

| Line 259: | Line 622: | ||

<pre> | <pre> | ||

| + | #!/bin/bash | ||

| + | #$ -t 1-13 -m a -cwd -N CLIP | ||

| + | files=(Fox1_1 Fox1_2 Fox1_3 Fox1_4 Fox2_1 Fox2_2 Fox2_3 Fox2_4 Fox3_1 Fox3_2 Fox3_3 Fox3_4 Fox3_5); | ||

| + | f=${files[$SGE_TASK_ID-1]} | ||

| − | perl | + | perl /usr/local/CTK/tag2collapse.pl -v -big --random-barcode -EM 30 --seq-error-model alignment -weight --weight-in-name --keep-max-score --keep-tag-name $f.tag.bed $f.tag.uniq.bed |

</pre> | </pre> | ||

| − | + | *Usage and additional explanation of [[CTK_usage#tag2collapse.pl|tag2collapse.pl]]. | |

| + | '''Note 1:''' Sequencing errors in the degenerate barcodes are estimated from results of read alignment. In addition, the number of substitutions must be provided in the 5th column. Note that the read ID in the 4th column must take the form READ#x#NNNNN, where x is the number of exact duplicates and NNNNN is the bar-code nucleotide sequence appended to read IDs in previous steps. Read IDs that are not in this format will generate an error. | ||

| + | |||

| + | |||

| + | Get the mutations in unique tags | ||

<pre> | <pre> | ||

| − | + | #!/bin/bash | |

| + | #$ -t 1-13 -m a -cwd -N CLIP | ||

| + | files=(Fox1_1 Fox1_2 Fox1_3 Fox1_4 Fox2_1 Fox2_2 Fox2_3 Fox2_4 Fox3_1 Fox3_2 Fox3_3 Fox3_4 Fox3_5); | ||

| + | f=${files[$SGE_TASK_ID-1]} | ||

| + | |||

| + | perl /usr/local/CTK/selectRow.pl -q 3 -f 3 $f.mutation.txt $f.tag.uniq.bed > $f.tag.uniq.mutation.txt | ||

| + | |||

| + | #or alternatively, use the following python script | ||

| + | #python /usr/local/CTK/joinWrapper.py $f.mutation.txt $f.tag.uniq.bed 4 4 N $f.tag.uniq.mutation.txt | ||

| + | #the parameters 4 4 indicate the columns in the two input file used to join the two, and N indicates that only paired rows should be printed | ||

</pre> | </pre> | ||

| − | + | *Usage and additional explanation of [[CTK_usage#selectRow.pl|selectRow.pl]]. | |

| + | |||

| + | Table 2 summarizes the number of unique mutations of different types in each sample. | ||

| + | |||

| + | {| class="wikitable" | ||

| + | |+Table 2: Summary of mutations in unique tags. | ||

| + | ! Protein | ||

| + | ! Sample | ||

| + | ! CLIP protocol | ||

| + | ! # of unique tags | ||

| + | ! Deletions | ||

| + | ! Insertions | ||

| + | ! Substitutions | ||

| + | |- | ||

| + | | Fox1 | ||

| + | | Fox1_1 | ||

| + | | Standard | ||

| + | | 357,094 | ||

| + | | 30,675 | ||

| + | | 4,705 | ||

| + | | 112,837 | ||

| + | |- | ||

| + | | Fox1 | ||

| + | | Fox1_2 | ||

| + | | BrdU | ||

| + | | 94,757 | ||

| + | | 2,742 | ||

| + | | 2,022 | ||

| + | | 29,840 | ||

| + | |- | ||

| + | | Fox1 | ||

| + | | Fox1_3 | ||

| + | | BrdU | ||

| + | | 161,487 | ||

| + | | 7,348 | ||

| + | | 3,138 | ||

| + | | 47,372 | ||

| + | |- | ||

| + | | Fox1 | ||

| + | | Fox1_4 | ||

| + | | BrdU | ||

| + | | 1,194,617 | ||

| + | | 43,953 | ||

| + | | 11,012 | ||

| + | | 342,695 | ||

| + | |- | ||

| + | | Fox2 | ||

| + | | Fox2_1 | ||

| + | | Standard | ||

| + | | 295,180 | ||

| + | | 26,791 | ||

| + | | 6,134 | ||

| + | | 103,992 | ||

| + | |- | ||

| + | | Fox2 | ||

| + | | Fox2_2 | ||

| + | | BrdU | ||

| + | | 83,358 | ||

| + | | 1,192 | ||

| + | | 1,832 | ||

| + | | 25,128 | ||

| + | |- | ||

| + | | Fox2 | ||

| + | | Fox2_3 | ||

| + | | BrdU | ||

| + | | 337,811 | ||

| + | | 10,911 | ||

| + | | 3,442 | ||

| + | | 75,912 | ||

| + | |- | ||

| + | | Fox2 | ||

| + | | Fox2_4 | ||

| + | | BrdU | ||

| + | | 165,645 | ||

| + | | 2,292 | ||

| + | | 5,009 | ||

| + | | 67,585 | ||

| + | |- | ||

| + | | Fox3 | ||

| + | | Fox3_1 | ||

| + | | Standard | ||

| + | | 877,256 | ||

| + | | 51,536 | ||

| + | | 13,896 | ||

| + | | 27,1740 | ||

| + | |- | ||

| + | | Fox3 | ||

| + | | Fox3_2 | ||

| + | | BrdU | ||

| + | | 143,522 | ||

| + | | 6,058 | ||

| + | | 2,059 | ||

| + | | 34,729 | ||

| + | |- | ||

| + | | Fox3 | ||

| + | | Fox3_3 | ||

| + | | BrdU | ||

| + | | 399,878 | ||

| + | | 17,004 | ||

| + | | 3,499 | ||

| + | | 73,882 | ||

| + | |- | ||

| + | | Fox3 | ||

| + | | Fox3_4 | ||

| + | | BrdU | ||

| + | | 413,847 | ||

| + | | 17,195 | ||

| + | | 3,153 | ||

| + | | 72,674 | ||

| + | |- | ||

| + | | Fox3 | ||

| + | | Fox3_5 | ||

| + | | BrdU | ||

| + | | 1,076,782 | ||

| + | | 38,700 | ||

| + | | 10,790 | ||

| + | | 374,446 | ||

| + | |- | ||

| + | | '''Total''' | ||

| + | | | ||

| + | | | ||

| + | | '''5,601,234''' | ||

| + | | '''256,397''' | ||

| + | | '''70,691''' | ||

| + | | '''1,632,832''' | ||

| + | |} | ||

| + | |||

| + | [http://zhanglab.c2b2.columbia.edu/data/CTK/unique.tar.gz You can download unique tag and mutation files here.] | ||

| + | |||

| + | After getting the unique tags of each library, one might concatenate biological replicates, which are distinguished by different colors. As an example: | ||

| + | |||

<pre> | <pre> | ||

| − | + | ||

| + | perl /usr/local/CTK/bed2rgb.pl -v -col "128,0,0" Fox1_1.tag.uniq.bed Fox1_1.tag.uniq.rgb.bed | ||

| + | ... | ||

| + | |||

| + | cat *tag.uniq.rgb.bed > Fox.pool.tag.uniq.rgb.bed | ||

| + | cat *tag.uniq.mutation.txt > Fox.pool.tag.uniq.mutation.txt | ||

| + | |||

</pre> | </pre> | ||

| + | *Usage and additional explanation of [[CTK_usage#bed2rgb.pl|bed2rgb.pl]]. | ||

| − | + | ||

| + | We also concatenate the BrdU CLIP BED files separately for CITS analysis downstream in this pipeline. | ||

<pre> | <pre> | ||

| + | cat Fox1_2.tag.uniq.rgb.bed Fox1_3.tag.uniq.rgb.bed Fox1_4.tag.uniq.rgb.bed Fox2_1.tag.uniq.rgb.bed Fox2_2.tag.uniq.rgb.bed Fox2_3.tag.uniq.rgb.bed Fox2_4.tag.uniq.rgb.bed Fox3_1.tag.uniq.rgb.bed Fox3_2.tag.uniq.rgb.bed Fox3_3.tag.uniq.rgb.bed Fox3_4.tag.uniq.rgb.bed Fox3_5.tag.uniq.rgb.bed > Fox_BrdU.pool.tag.uniq.rgb.bed | ||

| − | + | cat Fox1_2.tag.uniq.mutation.txt Fox1_3.tag.uniq.mutation.txt ... Fox3_5.tag.uniq.mutation.txt > Fox_BrdU.pool.tag.uniq.mutation.txt | |

| − | ... | + | </pre> |

| + | |||

| + | '''Note 1:''' As a diagnostic step, get the length distribution of unique tags, which should be a more faithful representation of the library: | ||

| + | |||

| + | <pre> | ||

| − | + | awk '{print $3-$2}' $f.tag.uniq.bed | sort -n | uniq -c | awk '{print $2"\t"$1}' > $f.uniq.len.dist.txt | |

| − | + | ||

</pre> | </pre> | ||

| − | '''Note | + | '''Note 2:''' get genomic distribution of CLIP tags |

<pre> | <pre> | ||

| − | + | perl /usr/local/CTK/bed2annotation.pl -dbkey mm10 -ss -big -region -v -summary Fox.pool.tag.uniq.annot.summary.txt Fox.pool.tag.uniq.rgb.bed Fox.pool.tag.uniq.annot.txt | |

| − | + | ||

</pre> | </pre> | ||

| − | Check the summary file for the percentage of tags mapped to CDS, 3'UTR, introns, etc. | + | Make sure the current genome (mm10) is specified (mm10 and hg19 are currently supported). |

| + | |||

| + | Check the summary file (<tag.uniq.annot.summary.txt>) for the percentage of tags mapped to CDS, 3'UTR, introns, etc. | ||

| + | |||

| + | *Usage and additional explanation of [[CTK_usage#bed2annotation.pl|bed2annotation.pl]]. | ||

| + | |||

| + | '''Note 3:''' generate bedgraph for visualization in the genome browser: | ||

| + | |||

| + | <pre> | ||

| + | perl /usr/local/CTK/tag2profile.pl -v -ss -exact -of bedgraph -n ″Unique Tag Profile″ Fox.pool.tag.uniq.rgb.bed Fox.pool.tag.uniq.bedgraph | ||

| + | </pre> | ||

=Peak calling= | =Peak calling= | ||

| + | |||

| + | <pre> | ||

| + | cd ~/test | ||

| + | mkdir cluster | ||

| + | cd cluster | ||

| + | ln -s ../mapping/Fox.pool.tag.uniq.bed | ||

| + | </pre> | ||

| + | |||

| + | CTK provides two modes of peak calling. The first mode is peak calling without statistical assessment, which will find all potential peaks. The second mode is to find peaks with peak height more than one expected by chance. | ||

==Mode 1: Peak calling without statistical assessment== | ==Mode 1: Peak calling without statistical assessment== | ||

<pre> | <pre> | ||

| − | perl | + | perl usr/local/CTK/tag2peak.pl -big -ss -v --valley-seeking --valley-depth 0.9 Fox.pool.tag.uniq.bed Fox.pool.tag.uniq.peak.bed --out-boundary Fox.pool.tag.uniq.peak.boundary.bed --out-half-PH Fox.pool.tag.uniq.peak.halfPH.bed |

</pre> | </pre> | ||

| Line 310: | Line 850: | ||

This script searches for all potential peaks using a "valley seeking" algorithm. A peak is called if valleys of certain depths are found on both sides so that it is separated from other peaks. The results are similar to those generated by the original clustering algorithm. However, this valley seeking algorithm is advantageous because it is able to separate neighboring peaks without zero-tag gaps. | This script searches for all potential peaks using a "valley seeking" algorithm. A peak is called if valleys of certain depths are found on both sides so that it is separated from other peaks. The results are similar to those generated by the original clustering algorithm. However, this valley seeking algorithm is advantageous because it is able to separate neighboring peaks without zero-tag gaps. | ||

| − | |||

| − | One also has the option to merge peaks close to each other by specifying the distance between the peaks (e.g., -gap 20). | + | '''Note 1:''' To annotate peaks with overlapping genes and repeat masked sequences and get genomic breakdown: |

| + | |||

| + | <pre> | ||

| + | perl /usr/local/CTK/bed2annotation.pl -dbkey mm10 -ss -big -region -v -summary Fox.pool.tag.uniq.peak.annot.summary.txt Fox.pool.tag.uniq.peak.bed Fox.pool.tag.uniq.peak.annot.txt | ||

| + | </pre> | ||

| + | |||

| + | The output file Fox.pool.tag.uniq.peak.annot.txt has the detailed annotation for each peak, and Fox.pool.tag.uniq.peak.annot.summary.txt has summary statistics. | ||

| + | |||

| + | '''Note 1:''' Another useful option --valley-depth specifies the depth of the valley relative to the peak (0-1, 0.5 by default). One also has the option to merge peaks close to each other by specifying the distance between the peaks (e.g., -gap 20). | ||

| + | |||

| + | '''Note 2:''' Besides the peak boundaries (Fox.pool.tag.uniq.peak.bed), one can also output cluster boundaries (--out-boundary) and half peak boundaries (--out-half-PH ) associated with each peak. | ||

| + | |||

| + | The default is set to a valley depth of 0.9. | ||

| + | |||

| + | *Usage and additional explanation of [[CTK_usage#tag2peak.pl|tag2peak.pl]]. | ||

| + | |||

In this mode, the script will not assess the statistical significance of the peak height. If one needs this, an alternative mode is as follows: | In this mode, the script will not assess the statistical significance of the peak height. If one needs this, an alternative mode is as follows: | ||

| Line 320: | Line 874: | ||

<pre> | <pre> | ||

| − | perl | + | perl /usr/local/CTK/tag2peak.pl -big -ss -v --valley-seeking -p 0.05 --valley-depth 0.9 --multi-test -gene ~/data/genomes/mm10/annotation/refGene_knownGene.meta.bed Fox.pool.tag.uniq.bed Fox.pool.tag.uniq.peak.sig.bed --out-boundary Fox.pool.tag.uniq.peak.sig.boundary.bed --out-half-PH Fox.pool.tag.uniq.peak.sig.halfPH.bed |

</pre> | </pre> | ||

| Line 328: | Line 882: | ||

It is important to note that in this mode, only clusters overlapping with annotated genes are kept. | It is important to note that in this mode, only clusters overlapping with annotated genes are kept. | ||

| − | In both cases, the three output files | + | In both cases, the three output files provide the positions of peak (many times it is 1nt, but not always), the peak width at half peak height, and the boundaries of each peak. In practice, it appears that the center of peak or half-peak provides the most robust/precise indicator of the binding sites (although a shift might be present because of CITS, which can be adjusted after one examines the enrichment of the motif). |

| − | + | ||

| − | + | ||

<pre> | <pre> | ||

| + | wc -l Fox.pool.tag.uniq.peak.sig.bed | ||

| + | 29057 Fox.pool.tag.uniq.peak.sig.bed | ||

| + | </pre> | ||

| − | + | '''Note 1:''' To search for a known binding motif, one first defines the center of each peak (based on width at half PH). | |

| − | + | ||

| − | + | ||

| − | + | <pre> | |

| + | awk '{print $1"\t"int(($2+$3)/2)-500"\t"int(($2+$3)/2)+500"\t"$4"\t"$5"\t"$6}' Fox.pool.tag.uniq.peak.sig.halfPH.bed > Fox.pool.tag.uniq.peak.sig.halfPH.center.ext1k.bed | ||

| + | #+/-500 around peak center | ||

</pre> | </pre> | ||

| − | '''Note 2:'''One might want to count the number of tags | + | This bed file can be used to extract sequences around peak (pay attention to the strand), and search for enrichment of specific motif (e.g., UGCAUG) relative to the peak. |

| + | |||

| + | '''Note 2:'''One might want to count the number of tags overlapping with each cluster/peak for each sample (e.g., to evaluate correlation between replicates). | ||

<pre> | <pre> | ||

| + | perl /usr/local/CTK/tag2profile.pl -ss -region Fox.pool.tag.uniq.peak.sig.boundary.bed -of bed -v Fox.tag.uniq.bed Fox.tag.uniq.peak.sig.boundary.count.bed | ||

| + | </pre> | ||

| − | + | *Usage and additional explanation of [[CTK_usage#tag2profile.pl|tag2profile.pl]]. | |

| − | + | [http://zhanglab.c2b2.columbia.edu/data/CTK/peak_calling.tar.gz Download input and output files for peak calling here.] | |

=CIMS analysis= | =CIMS analysis= | ||

| + | |||

| + | |||

| + | |||

| + | Here we go back to the individual Fox files, such as Fox1_1.tag.uniq.bed and Fox1_1.tag.uniq.mutation.txt, and symbolically link them here: | ||

<pre> | <pre> | ||

| + | cd ~/test | ||

mkdir CIMS | mkdir CIMS | ||

cd CIMS | cd CIMS | ||

| − | ln -s ../mapping/ | + | ln -s ../mapping/Fox1_1.tag.uniq.mutation.txt ./ |

| − | ln -s ../mapping/ | + | ln -s ../mapping/Fox1_1.tag.uniq.bed ./ |

| + | ... | ||

| + | ln -s ../mapping/Fox3_5.tag.uniq.mutation.txt ./ | ||

| + | ln -s ../mapping/Fox3_5.tag.uniq.bed ./ | ||

</pre> | </pre> | ||

| Line 364: | Line 931: | ||

<pre> | <pre> | ||

| − | awk '{if($9==">") {print $0}}' | + | for f in Fox1_1 Fox1_2 Fox1_3 Fox1_4 Fox2_1 Fox2_2 Fox2_3 Fox2_4 Fox3_1 Fox3_2 Fox3_3 Fox3_4 Fox3_5 |

| − | awk '{if($9=="-") {print $0}}' | + | do |

| − | awk '{if($9=="+") {print $0}}' | + | awk '{if($9==">") {print $0}}' $f.tag.uniq.mutation.txt | cut -f 1-6 > $f.tag.uniq.sub.bed |

| + | awk '{if($9=="-") {print $0}}' $f.tag.uniq.mutation.txt | cut -f 1-6 > $f.tag.uniq.del.bed | ||

| + | awk '{if($9=="+") {print $0}}' $f.tag.uniq.mutation.txt | cut -f 1-6 > $f.tag.uniq.ins.bed | ||

| + | done | ||

| + | |||

| + | cat *tag.uniq.sub.bed > Fox.pool.tag.uniq.sub.bed | ||

| + | cat *tag.uniq.del.bed > Fox.pool.tag.uniq.del.bed | ||

| + | cat *tag.uniq.ins.bed > Fox.pool.tag.uniq.ins.bed | ||

</pre> | </pre> | ||

| Line 375: | Line 949: | ||

<pre> | <pre> | ||

| − | awk '{print $3-$2}' | + | awk '{print $3-$2}' Fox.pool.tag.uniq.del.bed | sort -n | uniq -c |

| + | |||

| + | 180787 1 | ||

| + | 74788 2 | ||

| + | 822 3 | ||

</pre> | </pre> | ||

| Line 384: | Line 962: | ||

<pre> | <pre> | ||

| − | + | perl /usr/local/CTK/CIMS.pl -n 10 -p -v --keep-cache -c cache_del Fox.pool.tag.uniq.bed Fox.pool.tag.uniq.del.bed Fox.pool.tag.uniq.del.CIMS.txt | |

| − | perl | + | |

| − | + | ||

</pre> | </pre> | ||

| − | By default, | + | By default, CIMS.pl will output all sites including those that are not statistically significant. This is recommended because one can play with stringency later. |

| + | |||

| + | *Usage and additional explanation of [[CTK_usage#CIMS.pl|CIMS.pl]]. | ||

<pre> | <pre> | ||

| − | awk '{if($9<=0. | + | awk '{if($9<=0.001) {print $0}}' Fox.pool.tag.uniq.del.CIMS.txt | sort -k 9,9n -k 8,8nr -k 7,7n > Fox.pool.tag.uniq.del.CIMS.s30.txt |

| − | cut -f 1-6 | + | cut -f 1-6 Fox.pool.tag.uniq.del.CIMS.s30.txt > Fox.pool.tag.uniq.del.CIMS.s30.bed |

</pre> | </pre> | ||

| − | This will keep only those with FDR<0. | + | This will keep only those with FDR<0.001. Significant sites will also be sorted by FDR, then by the number of tags with mutations, and then by the total number of overlapping tags. One might try different thresholds to get a balance of sensitivity/specificity (e.g. as judged from motif enrichment). |

'''Note 1:''' Another parameter that might be useful to improve signal to noise is m/k (i.g., $8/$7 in awk) | '''Note 1:''' Another parameter that might be useful to improve signal to noise is m/k (i.g., $8/$7 in awk) | ||

| Line 407: | Line 985: | ||

<pre> | <pre> | ||

| − | + | ||

| − | + | awk '{print $1"\t"$2-10"\t"$3+10"\t"$4"\t"$5"\t"$6}' Fox.pool.tag.uniq.del.CIMS.s30.bed > Fox.pool.tag.uniq.del.CIMS.s30.21nt.bed | |

| + | #+/-10 around CIMS | ||

</pre> | </pre> | ||

| + | |||

| + | This BED file could be used to extract sequences around CIMS (pay attention to the strand), and search for enrichment of specific motif (e.g., UGCAUG for the case of Rbfox1-3) relative to the peak. | ||

| + | |||

| + | <pre> | ||

| + | perl /usr/local/CTK/bed2fasta.pl -l "-10" -r 10 -org mm10 -v Fox.pool.tag.uniq.del.CIMS.s30.21nt.bed Fox.pool.tag.uniq.del.CIMS.s30.21nt.fa | ||

| + | PatternMatch -c TGCATG Fox.pool.tag.uniq.del.CIMS.s30.21.fa > Fox.pool.tag.uniq.del.CIMS.s30.21nt.TGCATG.bed | ||

| + | </pre> | ||

| + | |||

| + | [http://zhanglab.c2b2.columbia.edu/data/CTK/CIMS.tar.gz Download CIMS output files here.] | ||

One can repeat these steps for the other types of mutations (i.e. substitutions and insertions). | One can repeat these steps for the other types of mutations (i.e. substitutions and insertions). | ||

| Line 415: | Line 1,003: | ||

=CITS analysis= | =CITS analysis= | ||

| − | + | Only certain variations of the CLIP protocol allow you to perform CITS analysis (e.g. iCLIP, BrdU CLIP, etc). | |

| + | Therefore, link only the BrdU CLIP files (i.e. exclude the Standard CLIP: Fox1_1, Fox2_1, Fox3_1). | ||

| + | <pre> | ||

| + | cd ~/test | ||

mkdir CITS | mkdir CITS | ||

cd CITS | cd CITS | ||

| − | ln -s ../CIMS/ | + | ln -s ../CIMS/Fox1_2.tag.uniq.del.bed ./ |

| − | ln -s ../mapping/ | + | ln -s ../CIMS/Fox1_3.tag.uniq.del.bed ./ |

| + | ln -s ../CIMS/Fox1_4.tag.uniq.del.bed ./ | ||

| + | ... | ||

| + | ln -s ../CIMS/Fox3_5.tag.uniq.del.bed ./ | ||

| + | |||

| + | ln -s ../mapping/Fox1_2.tag.uniq.bed ./ | ||

| + | ln -s ../mapping/Fox1_3.tag.uniq.bed ./ | ||

| + | ln -s ../mapping/Fox1_4.tag.uniq.bed ./ | ||

| + | ... | ||

| + | ln -s ../mapping/Fox3_5.tag.uniq.bed ./ | ||

| + | |||

| + | |||

| + | cat *tag.uniq.del.bed > Fox_BrdU.pool.tag.uniq.del.bed | ||

| + | cat *tag.uniq.bed > Fox_BrdU.pool.tag.uniq.bed | ||

</pre> | </pre> | ||

| Line 427: | Line 1,031: | ||

<pre> | <pre> | ||

| + | perl /usr/local/CTK/removeRow.pl -q 3 -f 3 -v Fox_BrdU.pool.tag.uniq.bed Fox_BrdU.pool.tag.uniq.del.bed > Fox_BrdU.pool.tag.uniq.clean.bed | ||

| + | </pre> | ||

| − | + | *Usage and additional explanation of [[CTK_usage#removeRow.pl|removeRow.pl]]. | |

| − | + | ||

| − | |||

Get the position before the start site as a potential cross link site that causes truncation. | Get the position before the start site as a potential cross link site that causes truncation. | ||

| Line 437: | Line 1,041: | ||

<pre> | <pre> | ||

| − | perl | + | perl /usr/local/CTK/bedExt.pl -n up -l "-1" -r "-1" -v Fox_BrdU.pool.tag.uniq.clean.bed Fox_BrdU.pool.tag.uniq.clean.trunc.bed |

</pre> | </pre> | ||

| + | *Usage and additional explanation of [[CTK_usage#bedExt.pl|bedExt.pl]]. | ||

| Line 446: | Line 1,051: | ||

<pre> | <pre> | ||

| − | perl | + | perl /usr/local/CTK/tag2cluster.pl -big -s -maxgap "-1" -of bed -v Fox_BrdU.pool.tag.uniq.bed Fox_BrdU.pool.tag.uniq.cluster.0.bed |

| − | awk '{if($5>2) {print $0}}' | + | awk '{if($5>2) {print $0}}' Fox_BrdU.pool.tag.uniq.cluster.0.bed > Fox_BrdU.pool.tag.uniq.cluster.bed |

</pre> | </pre> | ||

| + | |||

| + | |||

| + | *Usage and additional explanation of [[CTK_usage#tag2cluster.pl|tag2cluster.pl]]. | ||

| + | |||

Now we are ready for the CITS analysis: | Now we are ready for the CITS analysis: | ||

| Line 455: | Line 1,064: | ||

<pre> | <pre> | ||

| − | perl | + | perl /usr/local/CTK/tag2peak.pl -big -ss -v --prefix "CITS" -gap 25 -p 0.001 -gene Fox_BrdU.pool.tag.uniq.cluster.bed Fox_BrdU.pool.tag.uniq.clean.trunc.bed Fox_BrdU.pool.tag.uniq.clean.CITS.s30.bed |

</pre> | </pre> | ||

| − | '''Note 1:''' | + | |

| + | '''Note 1:''' One can now perform motif enrichment analysis as described above in the CIMS section. | ||

'''Note 2:''' In the command line above, we opt to merge sites very close to each other and keep the most significant ones because CITS analysis tends to have more background when read through is frequent. | '''Note 2:''' In the command line above, we opt to merge sites very close to each other and keep the most significant ones because CITS analysis tends to have more background when read through is frequent. | ||

| − | |||

| − | |||

<pre> | <pre> | ||

| − | + | wc -l Fox_BrdU.pool.tag.uniq.clean.CITS.s30.bed | |

| − | + | 115363 Fox_BrdU.pool.tag.uniq.clean.CITS.s30.bed | |

| − | + | ||

| − | + | ||

| − | + | ||

</pre> | </pre> | ||

| − | + | [http://zhanglab.c2b2.columbia.edu/data/CTK/CITS.tar.gz Download CITS output files here.] | |

Revision as of 13:53, 8 August 2016

Contents

Introduction

Crosslinking and immunoprecipitation paired with highthroughput sequencing (HITS-CLIP or CLIP-Seq) has now been widely used to map protein-RNA interactions on a genome-wide scale. The CLIP Tool Kit (CTK) is a software package that provides a set of tools for analysis of CLIP data starting from the raw reads generated by the sequencer. It includes pipelines to filter and map reads, collapse PCR duplicates to obtain unique CLIP tags, define CLIP tag clusters and call peaks, and define the exact protein-RNA crosslink sites by CIMS and CITS analysis. This software package is an expansion of our previous CIMS package.

Crosslinking induced mutation site (CIMS) analysis is a computational method for CLIP-Seq data analysis to determine exact protein-RNA crosslink sites and thereby map protein-RNA interactions at single-nucleotide resolution. This method is based on the observation that UV cross linked amino-acid-RNA adducts introduce reverse transcription errors in cDNAs at certain frequencies, which are captured by sequencing and subsequent comparison of CLIP tags with a reference genome. More details of the biochemical and computational aspects of CLIP-Seq can be found in the following references:

Zhang, C. †, Darnell, R.B. † 2011. Mapping in vivo protein-RNA interactions at single-nucleotide resolution from HITS-CLIP data. Nat. Biotech. 29:607-614. Moore, J.*, Zhang, C.*, Grantman E.C., Mele, A., Darnell, J.C., Darnell, R.B. 2014. Mapping Argonaute and conventional RNA-binding protein interactions with RNA at single-nucleotide resolution using HITS-CLIP and CIMS analysis. Nat Protocols. 9(2):263-93. doi:10.1038/nprot.2014.012.

For crosslinking induced trunction analysis (CITS) described below, please refer to:

Weyn-Vanhentenryck,S.,M.*, Mele,A.*, Yan,Q.*, Sun,S., Farny,N., Zhang,Z., Xue,C., Herre,M., Silver,P.A., Zhang,M.Q., Krainer,A.R., Darnell,R.B. †, Zhang,C. † 2014. HITS-CLIP and integrative modeling define the Rbfox splicing-regulatory network linked to brain development and autism. Cell Rep. 10.1016/j.celrep.2014.02.005.

Versions

- v1.0.3 ( 08-08-2016 ), current

- improvement in software packaging and usage

- v1.0.0 ( 10-12-2015 )

- The initial beta release

Download

Source code

- czplib (perl): a perl library with various functions for genomic/bioinformatic analysis. download from github

- CTK (perl): the core algorithm. download from github

Sample dataset

Raw FASTQ files:

In this documentation, we will use the sample mouse brain Rbfox1-3 CLIP data (Weyn-Vanhentenryck et al. Cell Reports 2014) as a guide. Two protocols (standard CLIP and BrdU-CLIP) were used to generate this dataset.

The raw sequence files from Illumina sequencing can be downloaded from SRA (http://www.ncbi.nlm.nih.gov/sra/?term=SRP035321), and we assume FASTQ files have been generated using the sra toolkit (http://www.ncbi.nlm.nih.gov/Traces/sra/sra.cgi?cmd=show&f=software&m=software&s=software) and the following files Fox1_1.fastq.gz, Fox1_2.fastq.gz, Fox1_3.fastq.gz...Fox3_5.fastq.gz can be moved to the directory called fastq under the test folder in home directory (i.e., ~/test is our working directory). Fox1_1.fastq.gz, Fox2_1.fastq.gz, and Fox3_1.fastq.gz were generated from the Standard CLIP protocol, and the rest were generated from the BrdU CLIP protocol.

Download sample output files of major steps here:

- Fully preprocessed FASTQ files : output files generated for use right before mapping. The samples have been quality filtered, trimmed of 3' linker sequences, had exact duplicates collapsed, and barcodes stripped.

- Unique CLIP tag and mutation files : output files generated after mapping and collapsing PCR duplicates.

- CIMS output files : output files after CIMS analysis.

- CITS output files : output files after CITS analysis.

Prerequisites

This software is implemented in perl. It also relies on several standard linux/unix tools such as grep, cat, sort, etc. We have tested the software on RedHat Linux (Linux 2.6.32-504.8.1.el6.x86_64), although it is expected to work on most unix-like systems, including Mac OS X. In addition, several software packages are required by the pipeline for sequence preprocessing and alignment (the version number in our test is also indicated).

- FASTX Tool-Kit Version 0.0.13: http://hannonlab.cshl.edu/fastx_toolkit/download.html

- Burrows Wheeler Aligner (BWA) Version 0.7.12: http://bio-bwa.sourceforge.net/

- Samtools Version 1.3.1: http://samtools.sourceforge.net

- Perl Version 5.14.3 was used for testing, but we expect that newer versions of Perl will also be compatible: https://www.perl.org/get.html

- Perl library Math::CDF Version 0.1: http://search.cpan.org/~callahan/Math-CDF-0.1/CDF.pm

Installation

- Download and install software packages described in prerequisites.

- Download the czplib perl library files (refer back to Download section above)

- Decompress and move to whatever directory you like (as an example, we use /usr/local/lib/)

- Replace "x.tgz" below with the version of the package you downloaded

$tar zxvf czplib.v1.0.x.tgz $mv czplib /usr/local/lib/

Add the library path to the environment variable, so perl can find it.

PERL5LIB=/usr/local/lib/czplib

- Download CTK code and likewise decompress and move to whatever directory you like (as an example, we use /usr/local/)

$tar zxvf CTK.v1.0.x.tgz $cd CTK $cd .. $mv CTK /usr/local/

Add the dir to your $PATH environment variable if you would like.

Finally, some of the scripts will use a cache directory, which is under the working directory by default. One can specify another folder for cache using environment variable.

#e.g., add the following lines in .bash_profile CACHEHOME=$HOME/cache export CACHEHOME

Read preprocessing

Compared to our previous software package, this new pipeline, in all preprocessing steps prior to read alignment, operates on FASTQ files to take advantage of sequence quality scores.

Sample dataset

In our current experimental design (BrdU-CLIP), several CLIP libraries with different indexes are typically pooled together to be sequenced in one lane.

The sample dataset we use as a guide (mouse brain Rbfox1-3 CLIP) was generated using the standard CLIP or BrdU-CLIP protocols (see the table below; see the Download section if you have not downloaded them). We assume FASTQ files have been generated using the sra toolkit (http://www.ncbi.nlm.nih.gov/Traces/sra/sra.cgi?cmd=show&f=software&m=software&s=software) and the following files Fox1_1.fastq.gz, Fox1_2.fastq.gz, Fox1_3.fastq.gz...Fox3_5.fastq.gz can be moved to the directory called fastq under the test folder in home directory (i.e., ~/test is our working directory). Fox1_1.fastq.gz, Fox2_1.fastq.gz, and Fox3_1.fastq.gz were generated from the Standard CLIP protocol, and the rest were generated from the BrdU CLIP protocol.

Reads from the standard CLIP protocol begin with a 5nt random barcode, followed by the actual cDNA tags of variable lengths, and possibly 3' adaptor sequences.

Reads from the BrdU-CLIP protocol begin with a 14 nucleotides that are sample index and random barcode (4 nt sample index+ a fixed base (either G or C)+ an 8-nt random barcode + a G), followed by the actual cDNA tags of variable lengths, and possibly 3' adaptor sequences.

The number of reads in each file, after each step of processing, is summarized in Table 1 below.

| Protein | Sample | CLIP protocol | # of raw reads | # of filtered reads | # of trimmed reads | # of collapsed reads | # of mapped reads | # of unique tags |

|---|---|---|---|---|---|---|---|---|

| Fox1 | Fox1_1 | Standard | 128,502,865 | 124,979,444 | 113,958,244 | 4,953,805 | 3,713,799 | 357,094 |

| Fox1 | Fox1_2 | BrdU | 42,373,740 | 39,121,986 | 35,683,047 | 1,465,789 | 1,098,974 | 94,757 |

| Fox1 | Fox1_3 | BrdU | 36,103,020 | 32,500,181 | 29,682,907 | 2,112,635 | 1,468,725 | 161,487 |

| Fox1 | Fox1_4 | BrdU | 64,528,510 | 52,712,783 | 48,292,964 | 7,025,945 | 5,262,144 | 1,194,617 |

| Fox2 | Fox2_1 | Standard | 135,171,343 | 131,229,217 | 121,238,246 | 5,406,496 | 3,716,401 | 295,180 |

| Fox2 | Fox2_2 | BrdU | 42,744,272 | 39,351,367 | 35,005,375 | 1,314,878 | 968,115 | 83,358 |

| Fox2 | Fox2_3 | BrdU | 31,512,102 | 28,530,199 | 25,877,412 | 2,356,210 | 1,581,335 | 337,811 |

| Fox2 | Fox2_4 | BrdU | 46,383,375 | 40,317,796 | 29,418,988 | 2,761,500 | 1,803,408 | 165,645 |

| Fox3 | Fox3_1 | Standard | 181,578,804 | 173,884,893 | 162,979,630 | 10,405,177 | 7,742,410 | 877,256 |

| Fox3 | Fox3_2 | BrdU | 38,828,880 | 35,983,470 | 27,171,512 | 1,185,180 | 857,925 | 143,522 |

| Fox3 | Fox3_3 | BrdU | 31,774,690 | 29,095,065 | 26,332,663 | 2,295,078 | 1,669,958 | 399,878 |

| Fox3 | Fox3_4 | BrdU | 32,658,459 | 29,720,851 | 21,737,823 | 2,017,979 | 1,479,115 | 413,847 |

| Fox3 | Fox3_5 | BrdU | 58,175,879 | 47,324,888 | 42,941,116 | 6,200,102 | 4,551,275 | 1,076,782 |

| Total | 870,706,469 | 5,601,234 |

Demultiplexing samples

The files downloaded from SRA have already be demultiplexed. Therefore the next step has already been performed. It is included for illustration purposes for the user who would like to start from a FASTQ file consisting of multiple libraries.

cd ~/test/fastq

Assuming that we have six samples with indexes GTCA, GCATG, ACTG, AGCT, GCATC, TCGA, the following script will extract reads for each library using a simple loop.

#!/bin/bash

#For illustration

index=(GTCA GCATG ACTG AGCT GCATC TCGA);

samples=(sample1 sample2 sample3 sample4 sample5 sample6);

for ((i=0;i<=5;i=i+1)); do

idx=${index[$i]}

name=${samples[$i]}

perl /usr/local/CTK/fastq_filter.pl -v -if sanger -index 0:$idx -of fastq raw.fastq.gz - | gzip -c > $name.fastq.gz

done

Alternatively, we can parallelize the process by submitting an array of jobs to SGE.

#!/bin/bash

#$ -t 1-6 -m a -cwd -N CLIP

#For illustration

index=(GTCA GCATG ACTG AGCT GCATC TCGA);

samples=(sample1 sample2 sample3 sample4 sample5 sample6);

idx=${index[$SGE_TASK_ID-1]}

name=${samples[$SGE_TASK_ID-1]}

perl /usr/local/CTK/fastq_filter.pl -v -if sanger -index 0:$idx -of fastq raw.fastq.gz - | gzip -c > $name.fastq.gz

The script /usr/local/CTK/fastq_filter.pl will extract reads with indicated index sequence (e.g., GTCA) starting from position 0 (specified by the -index option) in the read.

- Usage and additional explanation of fastq_filter.pl

Note 1: This script can also be used to demultiplex samples from other CLIP protocols as long as the position relative to the read start is fixed.

Note 2: To get usage information, just run the script without any parameters (the same for the other scripts in the package).

Read quality filtering

cd ~/test mkdir filtering cd filtering

The same fastq_filter.pl script will extract reads passing quality filters for each library (of standard CLIP-seq data) using a simple loop.

#!/bin/bash for f in Fox1_1 Fox2_1 Fox3_1 do perl /usr/local/CTK/fastq_filter.pl -v -if sanger -f mean:0-29:20 -of fastq ../fastq/$f.fastq.gz $f.fastq done

This is the same thing, but using a Sun Grid Engine to execute this UNIX job on a remote machine.

#!/bin/bash

#$ -t 1-3 -m a -cwd -N CLIP

files=(Fox1_1 Fox2_1 Fox3_1);

f=${files[$SGE_TASK_ID-1]}

perl /usr/local/CTK/fastq_filter.pl -v -if sanger -f mean:0-29:20 -of fastq ../fastq/$f.fastq.gz $f.fastq

This script filters raw reads based on quality scores. -f mean:0-29-20 specifies a mean score of 20 or above in the first 30 bases (i.e., positions 0-29 for Standard CLIP), which includes 5 positions with sample indexes and the random barcode, followed by 25 positions with the actual CLIP tag.

The filtering should be run again on the BrdU CLIP (or iCLIP) samples slightly differently.

#!/bin/bash

#$ -t 1-10 -m a -cwd -N CLIP

files=(Fox1_2 Fox1_3 Fox1_4 Fox2_2 Fox2_3 Fox2_4 Fox3_2 Fox3_3 Fox3_4 Fox3_5);

f=${files[$SGE_TASK_ID-1]}

perl /usr/local/CTK/fastq_filter.pl -v -if sanger -f mean:0-38:20 -of fastq ../fastq/$f.fastq.gz $f.fastq

-f mean:0-38-20 specifies a mean score of 20 or above in the first 39 bases (i.e., positions 0-38 for BrdU CLIP), which includes 14 positions with sample indexes and the random barcode, followed by 25 positions with the actual CLIP tag.

The reason we filter as such is because low quality reads can introduce mapping errors and background. They will inflate the number of unique tags after removal of PCR duplicates.

Note 1: The reason we used the first four positions of the index for demultiplexing is due to historical confusion about the identity of the index sequences. It really should be 5nt, but it does not make a substantial difference.

Note 2: As a good practice, always check the number of reads in the raw files and compare the number of reads left after filtering to confirm if the numbers are what you would expect. This can be done by running the command:

#for raw reads zcat ../fastq/*.fastq.gz | wc -l #for filtered reads wc -l *.fastq

The wc command will give the number of lines (divide by 4 to get the actual read number).

Note 3: Demultiplexing and quality filtering can be combined into a single step by specifying -index and -f at the same time.

perl /usr/local/CTK/fastq_filter.pl -v -if sanger -index 0:GTCA -f mean:0-38:20 -of fastq ../fastq/in.fastq.gz - | gzip -c > out.fastq.gz

Trimming of 3' linker sequences

For long reads that are common now, collapsing before trimming is not very helpful. Therefore, we trim the 3' linker first (which can vary depending on the specific CLIP protocol performed).

For each sample, run the following command using tools provided by the Fastx Toolkit.

#!/bin/bash

#$ -t 1-3 -m a -cwd -N CLIP

#-l "mem=2G,time=192::"

files=(Fox1_1 Fox2_1 Fox3_1);

f=${files[$SGE_TASK_ID-1]}

fastx_clipper -a GTGTCAGTCACTTCCAGCGG -l 20 -n -i $f.fastq | fastq_quality_trimmer -t 5 -l 20 -o $f.trim.fastq #note that the 3' linker will vary for other CLIP protocol variations.

This command will remove the 3' linker and/or also remove extremely low quality bases (score < 5).

You can do the same for the BrdU CLIP files:

#!/bin/bash

#$ -t 1-10 -m a -cwd -N CLIP

#-l "mem=2G,time=192::"

files=(Fox1_2 Fox1_3 Fox1_4 Fox2_2 Fox2_3 Fox2_4 Fox3_2 Fox3_3 Fox3_4 Fox3_5);

f=${files[$SGE_TASK_ID-1]}

fastx_clipper -a TCGTATGCCGTCTTCTGCTTG -l 29 -n -i $f.fastq | fastq_quality_trimmer -t 5 -l 29 -o $f.trim.fastq

For fastx_clipper usage information:

fastx_clipper -h

Note 1: It is good to check the number of reads by running the command:

wc -l *.trim.fastq

Collapse exact duplicates

If multiple reads have exactly the same sequence, only one is kept.

#!/bin/bash

#$ -t 1-13 -m a -cwd -N CLIP

files=(Fox1_1 Fox1_2 Fox1_3 Fox1_4 Fox2_1 Fox2_2 Fox2_3 Fox2_4 Fox3_1 Fox3_2 Fox3_3 Fox3_4 Fox3_5);

f=${files[$SGE_TASK_ID-1]}

perl /usr/local/CTK/fastq2collapse.pl $f.trim.fastq $f.trim.c.fastq

- Usage and additional explanation of fastq2collapse.pl.

Note 1: It is good to check the number of reads by running the command:

wc -l *.trim.c.fastq

Strip barcode

The following script removes the 5' degenerate barcode.

#The barcode for standard CLIP is 5 nucleotides long

for f in Fox1_1 Fox2_1 Fox3_1

do

perl /usr/local/CTK/stripBarcode.pl -format fastq -len 5 $f.trim.c.fastq $f.trim.c.tag.fastq

done

#the barcode for BrdU CLIP is 14 nucleotides long

for f in Fox1_2 Fox1_3 Fox1_4 Fox2_2 Fox2_3 Fox2_4 Fox3_2 Fox3_3 Fox3_4 Fox3_5

do

perl /usr/local/CTK/stripBarcode.pl -format fastq -len 14 $f.trim.c.fastq $f.trim.c.tag.fastq

done

Note 1: We include sample index as part of the random barcode here.

- Usage and additional explanation of stripBarcode.pl.

After this step, one can get the distribution of tag length for diagnostic purposes.

awk '{if(NR%4==2) {print $0}}' $f.trim.c.tag.fastq | awk '{print length($0)}' | sort -n | uniq -c | awk '{print $2"\t"$1}' > $f.trim.c.tag.seqlen.stat.txt

You can download fully preprocessed FASTQ files here or alternatively use command:

wget http://zhanglab.c2b2.columbia.edu/data/CTK/preprocess.tar.gz tar -zxvf preprocess.tar.gz

Read mapping & parsing

We are now using BWA (version 0.7.12) for alignment instead of novoalign for two reasons:

- novoalign is slower than some of the other algorithms that becomes available, in part because the academic version of novoalign does not allow multi threading.

- BWA allows one to specify mismatch rate instead of the the absolute number, which is more appropriate for tags of different sizes (i.e. a smaller number of mismatches allowed for shorter tags after trimming).

Indexing reference genome

This step needs to be done only once.

After you have installed BWA, prepare a reference genome:

For example, build a reference mm10 genome. Download the reference genome here: http://ccb.jhu.edu/software/tophat/igenomes.shtml. In this case, make sure you are downloading the "Mus musculus UCSC MM10" reference.

wget ftp://igenome:G3nom3s4u@ussd-ftp.illumina.com/Mus_musculus/UCSC/mm10/Mus_musculus_UCSC_mm10.tar.gz tar -xvf Mus_musculus_UCSC_mm10.tar.gz cd /Mus_musculus_UCSC_mm10/Mus_musculus/UCSC/mm10/Sequence/Chromosomes

Change the chromosome header and combine the chromosomes into a full genome. Note that we exclude random chromosomes and the mitochondria chromosome in our analysis.

sed -i -- "s/chr//g" *.fa cat ch1.fa chr2.fa chr3.fa ... chrX.fa chrY.fa > mm10.fa #The chromosomes included in the index should not include random chromosomes or chrM.

Finally, create a BWA index and move it to a directory you like. In this example, the index is in the /genomes/mm10/bwa/ directory.

cd /genomes/mm10/bwa/ bwa index -a bwtsw mm10.fa

Read mapping

Now that the reference index has been prepared, you can proceed with mapping/alignment.

cd ~/test mkdir mapping cd mapping

Run bwa to align the reads to the reference genome.

#!/bin/bash

#$ -t 1-13 -m a -cwd -N CLIP

files=(Fox1_1 Fox1_2 Fox1_3 Fox1_4 Fox2_1 Fox2_2 Fox2_3 Fox2_4 Fox3_1 Fox3_2 Fox3_3 Fox3_4 Fox3_5);

f=${files[$SGE_TASK_ID-1]}

bwa aln -t 4 -n 0.06 -q 20 /genomes/mm10/bwa/mm10.fa ../filtering/$f.trim.c.tag.fastq > $f.sai

bwa samse /genomes/mm10/bwa/mm10.fa $f.sai ../filtering/$f.trim.c.tag.fastq > $f.sam

#the two steps can also be combined, using the following command:

#bwa aln -t 4 -n 0.06 -q 20 /genomes/mm10/bwa/mm10.fa ../filtering/$f.trim.c.tag.fastq | bwa samse /genomes/mm10/bwa/mm10.fa - ../filtering/$f.trim.c.tag.fastq > $f.sam

The option -n 0.06 specifies slightly more stringent criteria than the default. The number of allowed mismatches (substitutions or indels) depending on read length is as follows:

[bwa_aln] 17bp reads: max_diff = 1 [bwa_aln] 20bp reads: max_diff = 2 [bwa_aln] 45bp reads: max_diff = 3 [bwa_aln] 73bp reads: max_diff = 4 [bwa_aln] 104bp reads: max_diff = 5 [bwa_aln] 137bp reads: max_diff = 6 [bwa_aln] 172bp reads: max_diff = 7 [bwa_aln] 208bp reads: max_diff = 8 [bwa_aln] 244bp reads: max_diff = 9

The -q option is used to trim low quality reads (an average of 20 or below). However, this does not seem to do too much after the trimming steps above.

Parsing SAM file

#!/bin/bash

#$ -t 1-13 -m a -cwd -N CLIP

files=(Fox1_1 Fox1_2 Fox1_3 Fox1_4 Fox2_1 Fox2_2 Fox2_3 Fox2_4 Fox3_1 Fox3_2 Fox3_3 Fox3_4 Fox3_5);

f=${files[$SGE_TASK_ID-1]}

perl /usr/local/CTK/parseAlignment.pl -v --map-qual 1 --min-len 18 --mutation-file $f.mutation.txt $f.sam $f.tag.bed

This will keep only unique mappings (with MAPQ >=1) and a minimal mapping size of 18 nt.

Note 1: In the tag bed file, the 5′ column records the number of mismatches (substitutions) in each read

Note 2: Other aligners might not use a positive MAPQ as an indication of unique mapping.

Another useful option is --indel-to-end, which specifies the number of nucleotides towards the end from which indels should not be called (default=5 nt).

Note 3: The parsing script relies on MD tags, which is an optional field without strict definition in SAM file format specification. Some aligners might have slightly different format how they report mismatches. If other aligners than bwa is used, one should run the following command:

samtools view -bS $f.sam | samtools sort - $f.sorted samtools fillmd $f.sorted.bam /genomes/mm10/bwa/mm10.fa > $f.sorted.md.sam

This will ensure the sam file gets parsed properly.

- Usage and additional explanation of parseAlignment.pl.

Note 4: Keep track what proportion of reads can be mapped uniquely.

wc -l *.tag.bed

Note 4: the format of the mutation file is essentially the same as before.

Collapsing PCR duplicates

It is critical to collapse PCR duplicates, not only for the exact duplicates collapsed above, but also for those with slight differences due to sequencing errors.

#!/bin/bash

#$ -t 1-13 -m a -cwd -N CLIP

files=(Fox1_1 Fox1_2 Fox1_3 Fox1_4 Fox2_1 Fox2_2 Fox2_3 Fox2_4 Fox3_1 Fox3_2 Fox3_3 Fox3_4 Fox3_5);

f=${files[$SGE_TASK_ID-1]}

perl /usr/local/CTK/tag2collapse.pl -v -big --random-barcode -EM 30 --seq-error-model alignment -weight --weight-in-name --keep-max-score --keep-tag-name $f.tag.bed $f.tag.uniq.bed

- Usage and additional explanation of tag2collapse.pl.

Note 1: Sequencing errors in the degenerate barcodes are estimated from results of read alignment. In addition, the number of substitutions must be provided in the 5th column. Note that the read ID in the 4th column must take the form READ#x#NNNNN, where x is the number of exact duplicates and NNNNN is the bar-code nucleotide sequence appended to read IDs in previous steps. Read IDs that are not in this format will generate an error.

Get the mutations in unique tags

#!/bin/bash

#$ -t 1-13 -m a -cwd -N CLIP

files=(Fox1_1 Fox1_2 Fox1_3 Fox1_4 Fox2_1 Fox2_2 Fox2_3 Fox2_4 Fox3_1 Fox3_2 Fox3_3 Fox3_4 Fox3_5);

f=${files[$SGE_TASK_ID-1]}

perl /usr/local/CTK/selectRow.pl -q 3 -f 3 $f.mutation.txt $f.tag.uniq.bed > $f.tag.uniq.mutation.txt

#or alternatively, use the following python script

#python /usr/local/CTK/joinWrapper.py $f.mutation.txt $f.tag.uniq.bed 4 4 N $f.tag.uniq.mutation.txt

#the parameters 4 4 indicate the columns in the two input file used to join the two, and N indicates that only paired rows should be printed

- Usage and additional explanation of selectRow.pl.

Table 2 summarizes the number of unique mutations of different types in each sample.

| Protein | Sample | CLIP protocol | # of unique tags | Deletions | Insertions | Substitutions |

|---|---|---|---|---|---|---|

| Fox1 | Fox1_1 | Standard | 357,094 | 30,675 | 4,705 | 112,837 |

| Fox1 | Fox1_2 | BrdU | 94,757 | 2,742 | 2,022 | 29,840 |

| Fox1 | Fox1_3 | BrdU | 161,487 | 7,348 | 3,138 | 47,372 |

| Fox1 | Fox1_4 | BrdU | 1,194,617 | 43,953 | 11,012 | 342,695 |

| Fox2 | Fox2_1 | Standard | 295,180 | 26,791 | 6,134 | 103,992 |

| Fox2 | Fox2_2 | BrdU | 83,358 | 1,192 | 1,832 | 25,128 |

| Fox2 | Fox2_3 | BrdU | 337,811 | 10,911 | 3,442 | 75,912 |

| Fox2 | Fox2_4 | BrdU | 165,645 | 2,292 | 5,009 | 67,585 |

| Fox3 | Fox3_1 | Standard | 877,256 | 51,536 | 13,896 | 27,1740 |

| Fox3 | Fox3_2 | BrdU | 143,522 | 6,058 | 2,059 | 34,729 |

| Fox3 | Fox3_3 | BrdU | 399,878 | 17,004 | 3,499 | 73,882 |

| Fox3 | Fox3_4 | BrdU | 413,847 | 17,195 | 3,153 | 72,674 |

| Fox3 | Fox3_5 | BrdU | 1,076,782 | 38,700 | 10,790 | 374,446 |

| Total | 5,601,234 | 256,397 | 70,691 | 1,632,832 |

You can download unique tag and mutation files here.

After getting the unique tags of each library, one might concatenate biological replicates, which are distinguished by different colors. As an example:

perl /usr/local/CTK/bed2rgb.pl -v -col "128,0,0" Fox1_1.tag.uniq.bed Fox1_1.tag.uniq.rgb.bed ... cat *tag.uniq.rgb.bed > Fox.pool.tag.uniq.rgb.bed cat *tag.uniq.mutation.txt > Fox.pool.tag.uniq.mutation.txt

- Usage and additional explanation of bed2rgb.pl.

We also concatenate the BrdU CLIP BED files separately for CITS analysis downstream in this pipeline.